Spider concepts

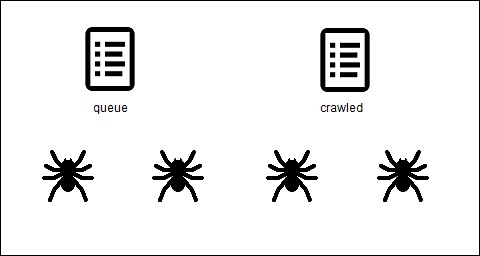

Let’s now briefly discuss how our spider will actually work. The spider grabs a link that needs to be crawled from the queue file. It connects to that page, downloads the page's HTML, and passes it to the LinkFinder class, which parses the HTML and extracts all the links. Each of these links is then added to the queue file, unless it is already there. Once the spider is done crawling the page, it deletes the page's link from the queue file and stores it in the crawled file.
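The steps above can be sketched in a few lines of Python. This is only a minimal illustration, not the final spider: the `LinkFinder` here is a simplified stand-in built on the standard `html.parser` module, `crawl_page` is a hypothetical helper name, the queue and crawled files are represented by in-memory sets, and the HTML is passed in as a string instead of being downloaded. The sketch also assumes that links already in the crawled set should be skipped, so that finished pages are not queued again.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkFinder(HTMLParser):
    """Simplified stand-in: collects absolute URLs from <a href="..."> tags."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page they came from
                    self.links.add(urljoin(self.page_url, value))


def crawl_page(page_url, html, queue, crawled):
    """Process one page: queue its new links, then mark the page as crawled."""
    finder = LinkFinder(page_url)
    finder.feed(html)
    for link in finder.links:
        # Assumption: skip links that are already waiting or already crawled
        if link not in queue and link not in crawled:
            queue.add(link)
    # Move the page from the queue to the crawled set
    queue.discard(page_url)
    crawled.add(page_url)


# Sets stand in for the queue and crawled files in this sketch
queue = {"https://example.com/"}
crawled = set()
html = '<a href="/about">About</a> <a href="https://example.com/">Home</a>'
crawl_page("https://example.com/", html, queue, crawled)
print(sorted(queue))    # ['https://example.com/about']
print(sorted(crawled))  # ['https://example.com/']
```

Using sets makes the "is this link already there?" check cheap and rules out duplicates automatically. The real spider still has to write these sets back to the queue and crawled files so the results survive between runs.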

Because we will have multiple spiders, we need to make sure that each of them accesses the same queue and crawled files: